Large Language Models (LLMs) don't need any introduction. They are transforming how we view and use search, knowledge retrieval, information extraction, and many other areas. Specific model names such as GPT, LLaMA, Gemini, PaLM, Claude, and others have quickly become household names in tech circles. LLMs already provide tremendous value for people in their work and daily lives.

Still, many companies struggle to make LLMs available to their employees, due to concerns about sharing internal data with external services and a lack of knowledge about self-hosting a model. Reliably running an LLM in production requires expertise in LLMOps, short for "machine learning operations (MLOps) for large language models." We have written extensively about effective MLOps in the past. In brief, the goal is to run models in production reliably, meaning in a way that keeps users happy and generates sustainable value. We do this using concepts from the established fields of DevOps and Site Reliability Engineering (SRE).

We have also previously written about LLMs for life sciences, where we explained key concepts such as Generative AI, Foundation Models, LLMs, and Artificial General Intelligence (AGI). We highlighted challenges such as technical expertise, data quality, ethical and legal issues, and continuous delivery, and emphasized the importance of MLOps and SRE. We also outlined how Generative AI and LLMs can be leveraged for topics such as drug discovery, biomarker identification, and image analysis.

The current blog post contains two large sections. In the first one, we address how to use a large language model for your company and what you need to consider. In the second part, we will pose a series of questions you should be able to answer if you want to run your own LLM reliably in production.

What are my options for using an LLM for my company?

Consider several approaches to explore the value an LLM can bring to your company. The first big decision is whether to use an existing online service or set it up yourself (potentially with the help of external partners) within your company network. The second important decision is how you will give the language model access to your private data so that it can provide maximum value. Each approach has unique advantages and challenges; we will briefly summarize them here.

Use a Service vs. Self-Hosted

Using an existing LLM service such as OpenAI's ChatGPT or Anthropic's Claude is easy and convenient, but has the significant disadvantage that you give up control over your data. The technical overhead is minimal, the APIs are user-friendly and well-documented, and the service providers offer customer support. However, because the data leaves your network, this is not an option for businesses handling sensitive information. Self-hosting gives you complete control over your system and data, ensuring privacy, flexibility, and control over updates. Of course, this approach requires a certain amount of technical expertise and investment of resources.

Out-of-the-Box vs. Fine-Tune vs. RAG

Several options exist regarding the model's customization level and interaction with your data. Note that both the service and the self-hosted approach can provide these options (although some services do not offer customization).

- Out-of-the-Box: This is the most straightforward approach, where you use a service without customization or deploy a pre-trained model without changes. This method is the quickest way to get started. However, it does not incorporate specific knowledge about your business because the model has (presumably) never seen your internal company data. It requires minimal technical effort but might provide insufficient value if not optimized for your specific tasks or industries. For example, banks cannot allow customer data to leave their internal networks. Prompts asking about their customers therefore will not return useful results as the model has never seen that information.

- Fine-tuning: This is the classical approach of incorporating specific knowledge from your data into the model by training (i.e., fine-tuning) a pre-trained model on your dataset. It can achieve the best results but requires a certain amount of resource investment related to time, infrastructure, and expertise. Fine-tuning is a good option if you want to specialize your model for a specific area and the required information is publicly available or based on internal data that is unlikely to change or be deleted. For example, it makes sense to fine-tune a model to be used as an assistant for general legal research or financial services. However, it is less favorable to fine-tune an LLM on private customer data or quickly outdated information.

- Retrieval-Augmented Generation (RAG): This is the modern approach, enabling the model to draw on external information when generating its response. Implementing it is usually more manageable and less costly than fine-tuning the model while offering excellent performance. In a nutshell, the process works in two main steps. First, information related to your prompt is retrieved from a separate knowledge base. Then, the prompt and the retrieved context are passed to the language model together. This means the model can use information from your internal company data without explicitly being trained on it. RAG excels in scenarios where access to the most current, possibly frequently changing, information is crucial, because it always retrieves data from the independently updated internal knowledge base. A practical application would be an assistant for banking support staff, helping them provide personalized financial advice by accessing up-to-date customer finance histories and related data.
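
To make the two RAG steps more concrete, here is a minimal sketch in Python. It assumes a toy in-memory knowledge base and uses simple word overlap as a stand-in for the embedding-based vector search a real system would typically use; the names `Document`, `retrieve`, and `build_prompt` are hypothetical and not part of any specific library. The resulting prompt would then be sent to whatever model you host.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, knowledge_base: list[Document], k: int = 2) -> list[Document]:
    """Step 1: rank documents by word overlap with the query and keep the top k."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(d.text)), d) for d in knowledge_base]
    scored = [(score, d) for score, d in scored if score > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[Document]) -> str:
    """Step 2: combine the retrieved context with the original prompt for the LLM."""
    context_block = "\n".join(f"- {d.text}" for d in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )
```

For example, with a knowledge base containing "Customer 42 opened a savings account in 2021." and an unrelated cafeteria notice, the query "When did customer 42 open a savings account?" retrieves only the customer document, and that text ends up in the prompt. The model itself never needs to be trained on it.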

As always, the best option depends on the organization's specific needs and willingness to invest and experiment. The RAG approach will likely provide good value for many organizations, as it strikes a good balance between the quality of results and the effort required for implementation. As awareness of and concerns about privacy grow, some users will request the deletion of some of their data. In such scenarios, the RAG approach offers a straightforward solution: the data can be deleted from the knowledge base without impacting the model. With the fine-tuning approach, accommodating such privacy requests is challenging, because completely removing the influence of that data would ultimately require restarting the fine-tuning process from the beginning without the deleted data.
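
The deletion argument can be illustrated with a small sketch. It assumes a hypothetical in-memory `KnowledgeBase` in which every document is tagged with the user it belongs to; a production system would use a document store or vector database with an equivalent delete-by-metadata operation. Note that the model does not appear at all: honoring the request only touches the knowledge base.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    """Toy store mapping document ids to (owner, text); hypothetical, for illustration."""
    docs: dict[str, tuple[str, str]] = field(default_factory=dict)

    def add(self, doc_id: str, owner: str, text: str) -> None:
        self.docs[doc_id] = (owner, text)

    def delete_owner(self, owner: str) -> int:
        """Remove every document belonging to one user; returns how many were removed."""
        to_remove = [doc_id for doc_id, (o, _) in self.docs.items() if o == owner]
        for doc_id in to_remove:
            del self.docs[doc_id]
        return len(to_remove)

    def search(self, term: str) -> list[str]:
        """Return ids of documents containing the term (case-insensitive)."""
        return [doc_id for doc_id, (_, text) in self.docs.items() if term.lower() in text.lower()]
```

After `delete_owner("alice")`, retrieval can no longer surface Alice's documents, so the next prompt is answered without them and no retraining is involved.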

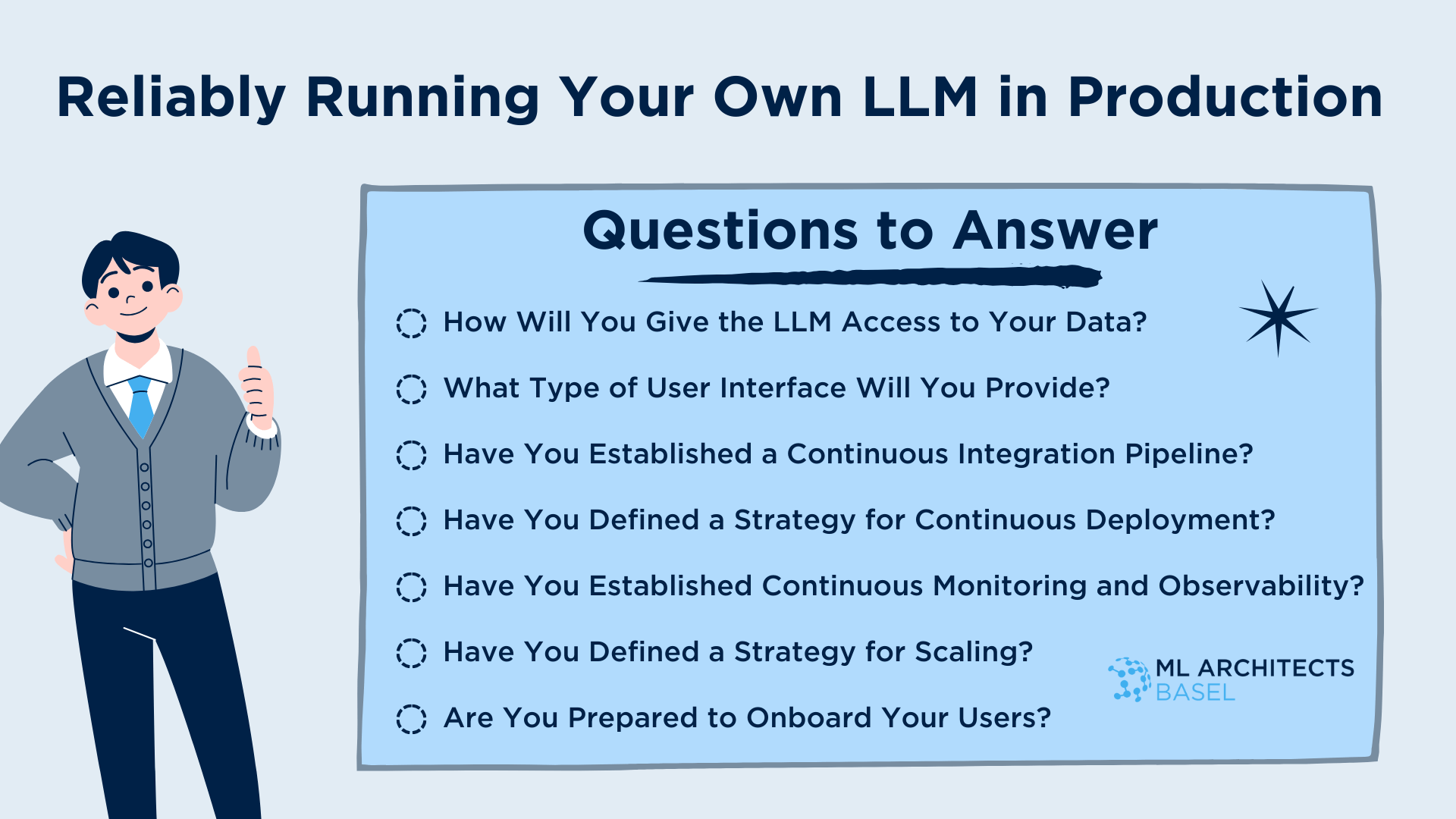

What should I think about to run my own LLM in production reliably?

We will now assume that you have decided to develop an LLM service for your business by self-hosting an open-source LLM. The first decision will be which exact model you want to run, but we will not cover this in this blog post. The model development field is highly active, and the best model choice might change quickly. We are more concerned with the fundamental issues you must address to run any LLM in production reliably. The questions we raise here are independent of your chosen model version.

We are talking about the field of MLOps (or, more specifically, LLMOps), which we have previously written about extensively. Nevertheless, running a language model in production comes with its own set of unique challenges. In this second part of the blog post, we highlight some of them by going through a series of questions you should be able to answer to ensure reliability.

How Will You Give the LLM Access to Your Data?

Suppose you have decided to optimize the outputs of your LLM by giving it access to your data (rather than just using an out-of-the-box version). As previously explained, you have two main options: fine-tuning the model or using retrieval-augmented generation (RAG). This choice can fundamentally alter how you put your model into production.

If you decide to fine-tune your model, it is crucial to set up reliable data and model (re-)training pipelines (have a look at our digital highway for many of the essential aspects of these). On the other hand, if you opt for a RAG approach, your priority shifts toward building and maintaining a robust knowledge base. The information in the knowledge base must be up-to-date and as complete as possible, and the retrieval system should be efficient and reliable.
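
One way to keep a knowledge base up-to-date without re-indexing everything is to compare content hashes of the source documents with the hashes recorded at the last indexing run. The sketch below assumes documents are available as an id-to-text mapping; `plan_reindex` is a hypothetical helper that only decides what needs to be (re-)embedded or deleted, not a complete indexing pipeline.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(source: dict[str, str], index_hashes: dict[str, str]) -> dict[str, list[str]]:
    """Compare current source documents with the hashes stored at the last indexing run."""
    current = {doc_id: content_hash(text) for doc_id, text in source.items()}
    added = sorted(d for d in current if d not in index_hashes)
    changed = sorted(d for d in current if d in index_hashes and index_hashes[d] != current[d])
    removed = sorted(d for d in index_hashes if d not in current)  # must be deleted from the index
    return {"added": added, "changed": changed, "removed": removed}
```

Running such a comparison on a schedule (or on change events) keeps indexing costs proportional to what actually changed, and the `removed` list also covers documents deleted at a user's request.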

What Type of User Interface Will You Provide?

Thinking about how your users will interact with the model is essential. Whether you are building a chatbot or a plugin for a browser or IDE, it is vital to implement reliable build, test, and deploy cycles for your application pipeline. Furthermore, if one of your goals is to offer the LLM as a service, you need to provide a robust API. Incorporating multi-model backends can significantly enhance the value of your service. For example, your service could use different models for code completion and text generation while sharing the same knowledge base.
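
A multi-model backend can be sketched as a small router: each task type maps to its own model, while every request first goes through the same retrieval step. In this sketch the backends and the `retrieve` function are plain callables standing in for real model endpoints and a real knowledge base; `make_router` and the task names are hypothetical.

```python
from typing import Callable

Backend = Callable[[str], str]  # takes a full prompt, returns the model's answer


def make_router(backends: dict[str, Backend], retrieve: Callable[[str], str]) -> Callable[[str, str], str]:
    """Build a request handler that picks a model per task but shares one knowledge base."""
    def route(task: str, prompt: str) -> str:
        if task not in backends:
            raise ValueError(f"unknown task: {task}")
        context = retrieve(prompt)  # same retrieval step for every backend
        return backends[task](f"{context}\n{prompt}")
    return route
```

Keeping retrieval outside the individual backends means a model can be swapped or added without duplicating the knowledge base.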

Have You Established a Continuous Integration Pipeline?

A continuous integration (CI) pipeline with a fast feedback loop is crucial for allowing rapid development while maintaining the reliability of your system in production.

Although testing and performance evaluation of LLMs present unique challenges, this makes them all the more valuable. While testing the responses of LLMs is not a solved problem, the state of the art is rapidly evolving. Solutions such as evaluation frameworks are in development (see also Microsoft's recent version). Ultimately, the models should be evaluated and tested regarding accuracy, relevancy, recall, hallucinations, bias, and any other chosen metric.

Testing LLMs can be approached in various ways. One effective method is to prompt the model for a straightforward, deterministic response. For example, ask it to “reply only with a simple YES or NO” to a clear-cut question or “choose from 1, 2, or 3” in a well-defined multiple-choice question. To minimize randomness, most models support setting a so-called temperature parameter to zero, which typically leads to more deterministic responses. It is also beneficial to repeat the exact prompt multiple times and verify that (most) outputs align with expectations.
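
The YES/NO strategy with repeated prompts could look like the following sketch. It assumes your model is wrapped in a hypothetical `generate(prompt, temperature)` function; the 5 runs and 80% agreement threshold are illustrative defaults, not recommendations.

```python
from collections import Counter
from typing import Callable

Generate = Callable[[str, float], str]  # hypothetical wrapper: (prompt, temperature) -> text


def check_yes_no(
    generate: Generate,
    question: str,
    expected: str,
    runs: int = 5,
    min_agreement: float = 0.8,
) -> bool:
    """Ask a clear-cut question several times at temperature 0 and require most answers to match."""
    prompt = f"{question}\nReply only with a simple YES or NO."
    answers = [generate(prompt, 0.0).strip().upper() for _ in range(runs)]
    counts = Counter(answers)
    return counts[expected] / runs >= min_agreement
```

In a CI pipeline, a fake `generate` function can exercise the test logic itself, while the real model is plugged in for evaluation runs.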

Another strategy involves asking for less deterministic responses that are still easy to verify. A classic example is requesting a summary of a longer text and checking whether the response is shorter than the original text. Assessing the model's self-consistency can also be highly valuable. For instance, ask the model to rephrase a paragraph and then query the model to determine if the input and output convey the same meaning (perhaps prompting for a straightforward YES or NO answer as explained above).
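
Both checks can be expressed as small test helpers. Again, `generate(prompt, temperature)` is a hypothetical wrapper around your model, and counting whitespace-separated words is a simplifying assumption for measuring length (tokens would work too).

```python
from typing import Callable

Generate = Callable[[str, float], str]  # hypothetical wrapper: (prompt, temperature) -> text


def check_summary_is_shorter(generate: Generate, text: str) -> bool:
    """A summary should be non-empty and shorter than its source text."""
    summary = generate(f"Summarize the following text:\n{text}", 0.0)
    return 0 < len(summary.split()) < len(text.split())


def check_paraphrase_consistency(generate: Generate, paragraph: str) -> bool:
    """Rephrase a paragraph, then ask the model whether both versions mean the same."""
    rephrased = generate(f"Rephrase the following paragraph:\n{paragraph}", 0.0)
    verdict = generate(
        "Do these two texts convey the same meaning? Reply only with a simple YES or NO.\n"
        f"Text A: {paragraph}\nText B: {rephrased}",
        0.0,
    )
    return verdict.strip().upper() == "YES"
```

Neither check proves the output is good, but both catch obvious regressions cheaply.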

Finally, you can employ more domain-specific tests. If the LLM is designed to generate code, you can test that the code compiles, runs, and produces the expected result. A general best practice for testing LLMs is to always ask the model for an explanation when outputs deviate from expectations because this will significantly benefit debugging.
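
For a code-generating LLM, a minimal test could check that the generated Python compiles and that running it prints the expected result. The sketch below assumes the generated code is a standalone Python script; in a real setup you would run untrusted code in an isolated sandbox rather than directly on the CI host. The helper names are hypothetical.

```python
import subprocess
import sys


def compiles(code: str) -> bool:
    """Check that the generated code is syntactically valid Python."""
    try:
        compile(code, "<generated>", "exec")
        return True
    except SyntaxError:
        return False


def check_generated_code(code: str, expected_stdout: str, timeout: float = 5.0) -> bool:
    """Compile, run in a separate interpreter, and compare stdout with the expectation."""
    if not compiles(code):
        return False
    try:
        result = subprocess.run(
            [sys.executable, "-c", code], capture_output=True, text=True, timeout=timeout
        )
    except subprocess.TimeoutExpired:
        return False  # treat hanging code as a failure
    return result.returncode == 0 and result.stdout.strip() == expected_stdout
```

When such a check fails, the failing code and its stderr are a natural input for asking the model to explain its output.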

Have You Defined a Strategy for Continuous Deployment?

The second critical phase after CI is continuous deployment (CD), which involves making efficient and reliable updates to your production environment. This process should be automated as much as possible. However, in many cases, the release might still involve a manual approval (which should be as seamless as possible, ideally just a single click). You should work with staging environments and establish a release strategy (e.g., canary release or feature flags) and a robust rollback mechanism.
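
A canary release with an automatic rollback criterion could be sketched as follows. It assumes users are identified by a string id and that you already collect error counts per model version; hashing the id gives each user a stable bucket, so the same user consistently sees the same version. The function names and the 1% tolerance are hypothetical.

```python
import hashlib


def in_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to one of 100 buckets; low buckets get the new version."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < canary_percent


def should_roll_back(
    canary_errors: int,
    canary_requests: int,
    baseline_error_rate: float,
    tolerance: float = 0.01,
) -> bool:
    """Roll back if the canary's error rate exceeds the baseline by more than the tolerance."""
    if canary_requests == 0:
        return False  # not enough data yet
    return canary_errors / canary_requests > baseline_error_rate + tolerance
```

Raising `canary_percent` step by step while `should_roll_back` stays false gives a gradual rollout; for LLMs, the error signal could also include quality indicators such as negative user feedback.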

Have You Established Continuous Monitoring and Observability?

Once your model is running in production, it is crucial to know what the state of your system is at any time and if this state satisfies your users. Site Reliability Engineering (SRE) is the modern way of approaching this.

The first step should be defining what a "happy user" means for your service. For example, users will expect that they can always access the service (availability), that they receive responses in a reasonable amount of time (latency and response time), and that the responses are correct, relevant, and complete. To measure and manage these metrics and the trade-off between reliability and the development of new features, we recommend implementing Service Level Indicators (SLIs) and Service Level Objectives (SLOs).
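
As a sketch of how SLIs and SLOs relate, the snippet below computes an availability SLI and a latency SLI (share of requests faster than a threshold) from hypothetical request records and derives how much of the error budget remains. The `Request` record and the concrete thresholds are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    succeeded: bool
    latency_s: float


def availability_sli(requests: list[Request]) -> float:
    """Share of requests that succeeded."""
    if not requests:
        raise ValueError("no requests in window")
    return sum(r.succeeded for r in requests) / len(requests)


def latency_sli(requests: list[Request], threshold_s: float) -> float:
    """Share of requests answered within the latency threshold."""
    if not requests:
        raise ValueError("no requests in window")
    return sum(r.latency_s <= threshold_s for r in requests) / len(requests)


def error_budget_remaining(sli: float, slo: float) -> float:
    """Fraction of the error budget left: 1.0 means untouched, 0 or less means exhausted."""
    allowed = 1.0 - slo
    return (allowed - (1.0 - sli)) / allowed
```

With 998 successful requests out of 1,000 and an availability SLO of 99%, the SLI is 0.998 and 80% of the error budget remains, leaving room for riskier feature releases; an exhausted budget suggests prioritizing reliability work.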

As we have alluded to in earlier sections of this blog post, measuring the quality of the responses from LLMs is tricky. One straightforward way to address this is to ask the user to provide feedback for each response. For example, a thumbs-up/thumbs-down or star rating can provide invaluable signals about the quality over time. You can obtain other valuable indicators for the state of your LLM service through metrics such as requests per second, response latency, output tokens per second, length of conversations (for chatbots), or the percentage of times a content filter intervened in responses.
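
Several of these indicators can be computed from request logs. The sketch assumes a hypothetical log record per request holding its latency, the number of generated tokens, the user's thumbs-up/thumbs-down rating (if any), and whether a content filter intervened. Output tokens per second is computed here as total tokens over total generation time, which is one of several reasonable definitions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LogEntry:
    latency_s: float
    output_tokens: int
    feedback: Optional[bool]  # True = thumbs-up, False = thumbs-down, None = not rated
    filtered: bool            # content filter intervened


def summarize(logs: list[LogEntry], window_s: float) -> dict[str, Optional[float]]:
    """Aggregate service indicators over one time window."""
    if not logs:
        raise ValueError("no log entries in window")
    rated = [e.feedback for e in logs if e.feedback is not None]
    return {
        "requests_per_s": len(logs) / window_s,
        "output_tokens_per_s": sum(e.output_tokens for e in logs) / sum(e.latency_s for e in logs),
        "thumbs_up_ratio": sum(rated) / len(rated) if rated else None,
        "filter_rate": sum(e.filtered for e in logs) / len(logs),
    }
```

Tracking these values over time, rather than as one-off snapshots, is what turns them into useful signals about the state of the service.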

MLAB and our partners have previously written about Observability for MLOps and Effective SRE in more detail. Feel free to reach out anytime if you need help implementing reliability for MLOps.

Have You Defined a Strategy for Scaling?

Be prepared for fluctuating user demand and ensure availability and low response times during peak times. Allocate resources based on current and projected demand, and scale back during quiet periods to save costs. For example, user traffic might be low during weekends. The primary unique challenge of LLMs is that they usually require GPUs for acceptable performance. These are vastly more expensive than CPUs and less available, with even large cloud providers sometimes facing shortages of GPUs. Mitigating this risk might mean not relying on a single type of GPU, reserving instances for peak times, or never scaling back too far. In general, manage infrastructure as code (IaC), which simplifies scaling up or down and ensures consistency. Load balancing and redundancy are other essential pieces for reliably scaling your service.
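
A simple scaling rule might derive the number of model replicas from projected demand and measured per-replica capacity, with some headroom for spikes, and clamp the result to a minimum (so you never scale back too far and risk not getting GPUs back) and a maximum (your GPU quota or reservation). The function and its default 20% headroom are hypothetical; in practice, an autoscaler such as the one in your orchestration platform would apply a rule like this.

```python
import math


def desired_replicas(
    expected_rps: float,
    rps_per_replica: float,
    min_replicas: int,
    max_replicas: int,
    headroom: float = 0.2,
) -> int:
    """Replicas needed for the projected load plus headroom, kept within [min, max]."""
    needed = math.ceil(expected_rps * (1 + headroom) / rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

For example, 9 requests per second at 2 requests per replica needs 6 replicas with 20% headroom, while a quiet weekend load still keeps the configured minimum running.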

Are You Prepared to Onboard Your Users?

User onboarding is critical, given the unique challenges and potential risks associated with language models. Users must know the capabilities and the limitations before using the service. One of the most critical aspects in this regard is guidelines around data sharing. For example, Personally Identifiable Information (PII) might only be allowed as part of prompts if you have built your service specifically to handle it. Sometimes, the model might generate incorrect information ("hallucinations") or respond based on outdated, incomplete, or biased data. This means users should keep their guard up and double-check information with other sources if unsure. In addition, it should be effortless for users to immediately provide feedback about the quality of the model's response, such as a thumbs-up or thumbs-down. You can use this feedback to monitor the state of the service and improve future versions of the model. Finally, we recommend providing your users with tips and best practices on writing effective prompts for the specific model. Likewise, supplying examples and (prompt) templates for standard and more advanced queries is very valuable. This will help users get the most out of your service and improve satisfaction.
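
Data-sharing guidelines can be reinforced in the interface itself, for example by warning users before a prompt that looks like it contains PII is sent. The sketch below uses two deliberately naive regular expressions for e-mail addresses and phone numbers; these patterns are assumptions for illustration and will miss many kinds of PII, so a real deployment would need a dedicated PII detection approach.

```python
import re

# Naive, illustrative patterns; they are neither complete nor free of false positives.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def find_pii(prompt: str) -> list[str]:
    """Return the names of PII categories that appear to be present in the prompt."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(prompt)]
```

If `find_pii` returns a non-empty list, the interface could show a reminder of the data-sharing guidelines instead of silently sending the prompt.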

Summary

LLMs are here to stay, and many businesses have started exploring how their employees can use them, but are not able or willing to expose their internal data to the outside in any way. This means that external services are off-limits, but it does not mean that LLMs cannot be used at all.

The solution is to self-host the model within the company network. In this blog post, we have highlighted questions that should be answered when embarking on this journey. Note that we also addressed many of these questions in greater detail in previous blog posts (blueprint for our digital highway, observability, data reliability, testing and quality assurance).

If you are interested in a technical tutorial on deploying an LLM, have a look at our dedicated blog post, which walks through our open-source implementation of an LLM service using an open-source LLM.

In the meantime, don't hesitate to reach out to us with any questions!

Machine Learning Architects Basel

Machine Learning Architects Basel (MLAB) is a member of the Swiss Digital Network (SDN). Having pioneered the Digital Highway for End-to-End Machine Learning & Effective MLOps, we have created frameworks and reference models that combine our expertise in DataOps, Machine Learning, MLOps, and our extensive knowledge and experience in DevOps, SRE, and agile transformations. This expertise allows us to implement reliable LLMs in production and advise our customers to do the same.

If you want to learn more about how MLAB can aid your organization in creating long-lasting benefits by developing and maintaining reliable data and machine learning solutions, don't hesitate to contact us.